Big O Notation Part 2

In the previous article (part 1), We learnt the basics of asymptotic notation and its type. In this post we will learn how to calculate Big O notation of an algorithm.

Calculating Big O

When it comes to finding Big O notation of an algorithm we should keep following rules in mind.

1) Drop the constants :- Since we are trying to do worst case analysis, We can drop the constants as we only care about the growth rate of the algorithm as input size n increases. Constants are insignificant as compared to the input size.

2) Drop the non-dominant term :- Dominant term is the term that increases most quickly as the size of the input increases. Similarly, like dropping the constant, We can also remove non-dominant term, Since non-dominant terms does not play a significant role in the approximation. For example -

f(n) = n2 + 5n + 200

In the above expression we can drop 5n + 200, as n2 is more significant.

3) In If-Else, Include the block having maximum complexity :- As mentioned earlier, We are interested in the worst case. So when calculating Big O, we should choose the block having maximum complexity.

4) Runtime of loop block = runtime of statement(s) * number of iteration

By using the above mentioned rules we can calculate Big O of an algorithm.

Examples :-

Let us dig deeper with some examples. For the sake of simplicity, From now on we will assume each operation or step takes 1 unit of time to execute.

Example 1 - Constant Time or O(1) :-

function add(a, b) {

let sum = a + b; // 1 unit of time

return sum; // 1 unit of time

}The above is a simple function that takes 2 arguments, adds them and returns the result. Assuming each step takes 1 unit of time to execute, Total runtime of the above function can be expressed as :-

f(n) = 1 + 1 = 2

No matter what is the size of the input, The above function will always take 2 unit of time to execute. Since execution time is constant and independent of input size, Hence we can say Big O of f(n) is O(1).

Example 2 Linear or O(n) :-

/* funtion to add array elements of size n. */

function addArrayElements(arr) {

let sum = 0; // 1

for (i=0; i<arr.length; i++) {

sum = sum + arr[i]; // 1, this opertion will run n times

}

return sum; // 1

}Assuming the size of array to be n, The total execution time for the above function can be expressed as :-

f(n) = 1 + n + 1 = n + 2

The execution time of the above function depends upon the size of input. As the input size increases execution time increases. Using rule 1, We can drop the constants, Hence, Big O of f(n) is O(n).

Example 3 :-

/* function takes 2 arrays as input and returns the sum of

all its elements */

function add2ArraysElements(arr1, arr2) {

let sum = 0; // 1

for (i=0; i<arr1.length; i++) {

sum = sum + arr1[i]; // 1, this opertion will run n times

}

for (i=0; i<arr2.length; i++) {

sum = sum + arr2[i]; // 1, this opertion will run m times

}

return sum; // 1

}Assuming the size of arrays to be n and m, The total execution time for the above function can be expressed as :-

f(n) = 1 + n + m + 1 = n + m + 2

The execution time of the above function depends upon the size of the both input arrays. As the input size increases execution time increases. Using rule 1, We can drop the constants, Hence, Big O of f(n) is O(n+m).

Example 4:-

function addMatrixElements(mat, rows, columns) {

let sumFirstRow = 0; // 1

for (i=0; i<rows; i++) {

sumFirstRow = sumFirstRow + mat[0][i]; // 1

}

let totalSum = 0; // 1

for (i=0; i<rows; i++) {

for(j=0; j<columns;j++) {

totalSum = totalSum + mat[i][j]; // 1

}

}

return sum; // 1

}The above function takes 2 dimensional array and size of row and column as input. Assuming the size of row and column to be n and m, The total execution time for the above function can be expressed as :-

f(n) = 1 + n + 1 + nm + 1 = n + nm + 3

On removing non dominant term and constants, Big O of f(n) is O(n*m).

Example 5 :-

function addArrayElements(arr, arr2) {

let sum = 0; // 1

for (i=0; i<arr.length/2; i++) {

sum = sum + arr1[i]; // 1, this opertion will run n/2 times

}

}The above function takes an array and finds sum of first half of its elements. The total execution time for the above function can be expressed as :-

f(n) = 1 + n/2

On removing non dominant term and constants, Big O of f(n) is O(n). Here, We removed the coefficient 1/2, Because as compared to n, 1/2 is insignificant and does not effects worst case time.

Example 6 - Quadratic Complexity or O(n2) :-

function bubbleSort(arr) {

for (i=0; i<arr.length; i++) {

for(j=0; j<arr.length;j++) {

if (arr[j] > arr[j+1]) {

// swapping elements

int temp = arr[j] // 1

arr[j] = arr[j+1] // 1

arr[j+1] = temp // 1

}

}

}

}Above is an example of bubble sort. Assuming that the if condition is true every time (worst case), Total execution time will be :-

f(n) = 3n2 f(n) = O(n2) // On removing non dominant term and constants,

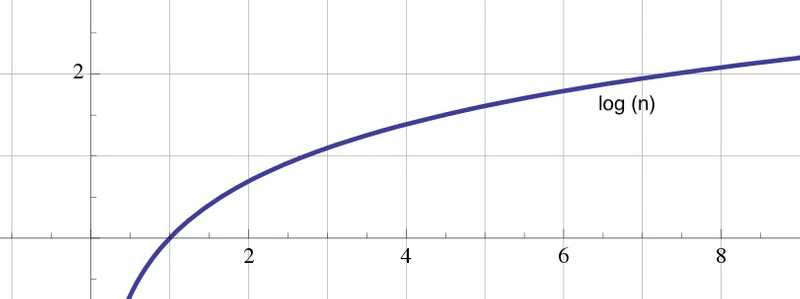

Example 7 - Logarithmic Complexity or O(log n) :-

function printElements(n) {

for (i=1; i<=n; i = i*2) {

console.log(i); // 1

}

}In the above example, Loop variable i is increased by 2 times at every loop. Now, Let’s assume n is 5, So the output of above function will be

1, 2, 4 // output f(n) = 3 // n = 5

Now, let’s assume n is 10, So the output will be

1, 2, 4, 8 f(n) = 4 // n = 10

As you can see from above, The time complexity does not grow as significantly as input size n. We say, Time complexity is O(log n) if loop variable is divided or multiplied by a constant. In other words, If after each iteration the work required is halved (or some how smaller), We say complexity is Logarithmic.

Most common example of Logarithmic complexity is Binary Search Algorithm

Example 8 :-

function factorial(n) {

var fact = 1;

if (n == 0) {

return 0;

} else {

for (i=1; i<=n; i++) {

fact = fact * i;

}

return fact;

}

}In the above example, else bock has the maximum complexity. Since the loop runs n times, Big O of f(n) is O(n).

Other Common Time Complexities

Cubic O(n3) :- This complexity occurs when we run 3 nested loop, each n times.

for(i=0; i<n; i++) {

for(j=0; j<n; j++) {

for(k=0; k<n; k++) {

print(k);

}

}

}In the above example, print statement will run n3, So the Big O is O(n3)

Linearithmic O(n log n) :- When an algorithm runs Logarithmic operations n times, We say it has a linearithmic complexity or O(n log n). Merge sort is a popular example of linearithmic complexity.

Exponential O(2n) :- This complexity occurs in algorithms where input size n, If incremented by even 1, Will double the processing required, For example Brute force algorithms